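Installation methods

Binary installation of Kubernetes (the most tedious configuration, no easier than installing OpenStack)

kubeadm installation (Google's automated installation tool; has network requirements)

minikube installation (only for trying out k8s)

yum installation (the simplest, but the versions are fairly old; recommended for learning)

Compiling from Go source (the hardest)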

Basic environment

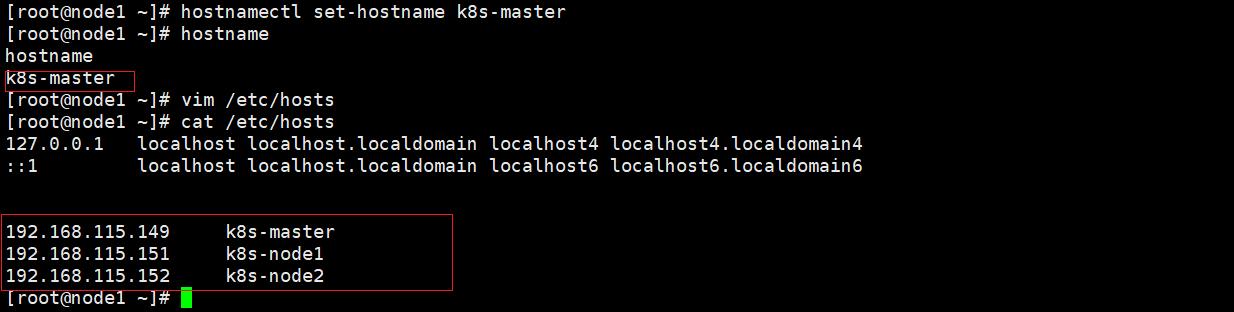

IP: 192.168.115.149, hostname: node1

IP: 192.168.115.151, hostname: node2

IP: 192.168.115.152, hostname: node3

Preparation

Note: all 3 machines in the k8s cluster need this preparation.

1. Check network addresses and UUIDs: make sure the MAC address and product_uuid are unique on every node.

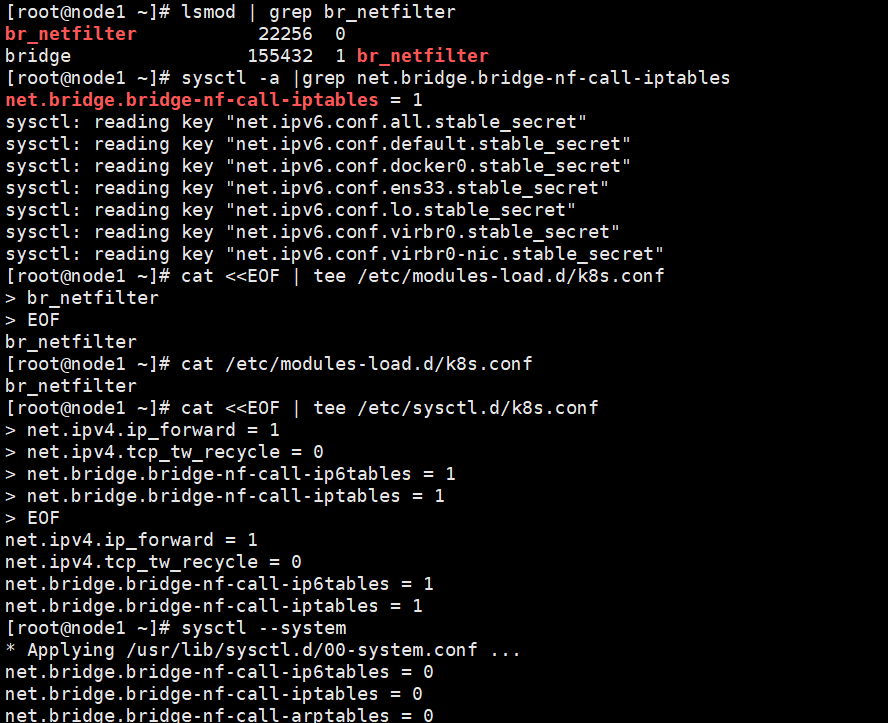

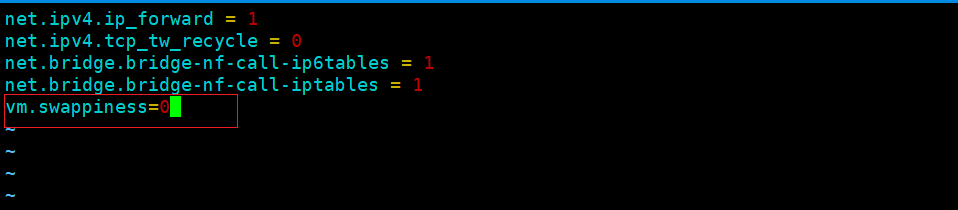

2. Let iptables see bridged traffic: make sure the br_netfilter module is loaded so that iptables can correctly see bridged traffic, and configure routing.

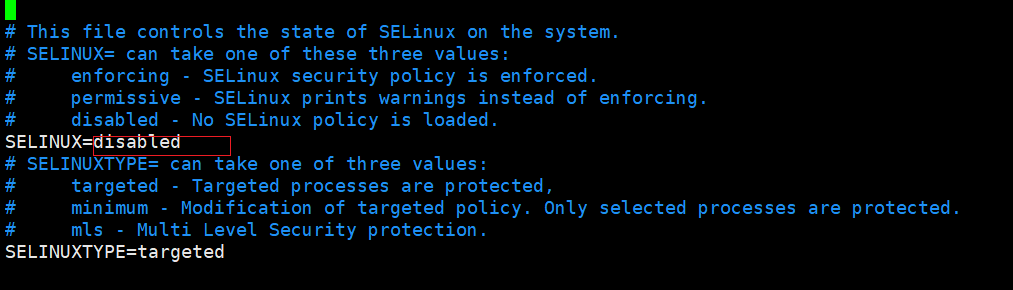

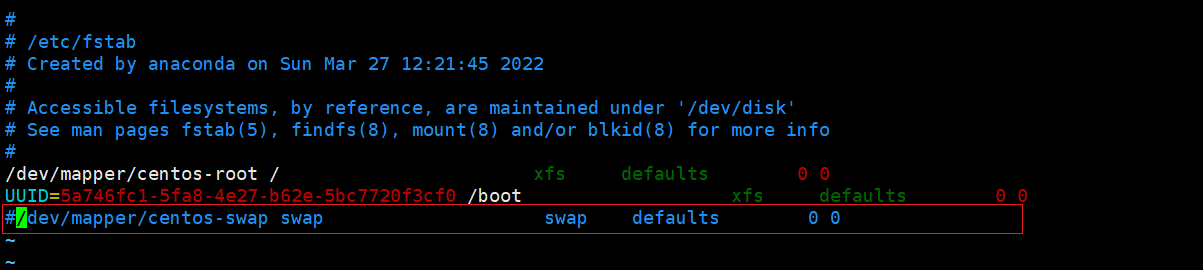

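3. Disable SELinux, the firewall and swap.

4. Set the hostname and add the nodes to /etc/hosts.

5. Install Docker: check which Docker versions are compatible with your k8s version, and set the cgroup driver to systemd; otherwise kubeadm init will print related warnings.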
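The five preparation steps above can be collected into shell functions, as a minimal sketch. It assumes CentOS 7 nodes with firewalld and sudo access, and it reads "configure routing" as enabling net.ipv4.ip_forward; all function names and the file names /etc/modules-load.d/k8s.conf and /etc/sysctl.d/k8s.conf are hypothetical choices, not taken from the original setup. The helpers that only print text (find_duplicates, k8s_sysctl_conf, comment_out_swap, hosts_entries, docker_daemon_json) are safe to try anywhere; the others change the system.

```bash
# Hypothetical helpers for preparation steps 1-5; run the system-changing ones on all 3 nodes.

# Step 1: read one MAC address or product_uuid per line (collected from all nodes,
# e.g. from `ip link` and /sys/class/dmi/id/product_uuid) and print duplicates.
find_duplicates() { sort | uniq -d; }

# Step 2: kernel settings for bridged traffic.
# Assumption: "configure routing" means enabling net.ipv4.ip_forward.
k8s_sysctl_conf() {
  printf '%s\n' \
    'net.bridge.bridge-nf-call-ip6tables = 1' \
    'net.bridge.bridge-nf-call-iptables = 1' \
    'net.ipv4.ip_forward = 1'
}
apply_sysctl() {
  sudo modprobe br_netfilter                                        # load now
  echo br_netfilter | sudo tee /etc/modules-load.d/k8s.conf >/dev/null  # load at boot
  k8s_sysctl_conf | sudo tee /etc/sysctl.d/k8s.conf >/dev/null
  sudo sysctl --system
}

# Step 3: comment out active swap lines of an fstab read from stdin.
comment_out_swap() { sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/'; }
disable_selinux_firewall_swap() {
  sudo setenforce 0                        # permissive until the next reboot
  sudo sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
  sudo systemctl disable --now firewalld   # assumption: firewalld is the firewall in use
  sudo swapoff -a
  sudo cp /etc/fstab /etc/fstab.bak
  comment_out_swap < /etc/fstab.bak | sudo tee /etc/fstab >/dev/null
}

# Step 4: the three nodes from the environment description.
NODES=("192.168.115.149 node1" "192.168.115.151 node2" "192.168.115.152 node3")
hosts_entries() { printf '%s\n' "${NODES[@]}"; }
setup_host() {   # usage: setup_host node1   (node2 / node3 on the other machines)
  sudo hostnamectl set-hostname "$1"
  hosts_entries | while read -r line; do
    # append only entries that are not already present
    grep -qxF "$line" /etc/hosts || echo "$line" | sudo tee -a /etc/hosts >/dev/null
  done
}

# Step 5: Docker daemon.json selecting the systemd cgroup driver.
docker_daemon_json() { printf '{\n  "exec-opts": ["native.cgroupdriver=systemd"]\n}\n'; }
configure_docker() {
  sudo mkdir -p /etc/docker
  docker_daemon_json | sudo tee /etc/docker/daemon.json >/dev/null  # overwrites an existing file
  sudo systemctl daemon-reload && sudo systemctl restart docker
  docker info 2>/dev/null | grep -i 'cgroup driver'                 # expect: systemd
}
```

For step 1, one option (assuming root SSH access between the nodes) is `for ip in 192.168.115.149 192.168.115.151 192.168.115.152; do ssh root@$ip cat /sys/class/dmi/id/product_uuid; done | find_duplicates`; empty output means the UUIDs are unique.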


Installing and deploying with yum

Reference: "Setting up and deploying k8s (very detailed)", Anime777's blog, CSDN

Install kubeadm, kubelet and kubectl

Note: all 3 machines in the k8s cluster need this step.

    # Add the Aliyun YUM repository for Kubernetes
    cat > /etc/yum.repos.d/kubernetes.repo <<'EOF'
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

    # Install kubeadm, kubelet and kubectl (choose a version compatible with your Docker version)
    yum install -y kubelet-1.21.1 kubeadm-1.21.1 kubectl-1.21.1

    # Start kubelet and enable it at boot (on the master node it reports errors until kubeadm init has run)
    systemctl start kubelet
    systemctl enable kubelet
    systemctl status kubelet
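Since all three packages are pinned to 1.21.1, a quick check that the installed binaries agree can save a confusing kubeadm init failure later. This is a minimal sketch: extract_version and check_k8s_versions are hypothetical helper names, and it assumes kubectl 1.21, where `kubectl version --client --short` is still available.

```bash
# Pull the first vX.Y.Z out of a version string such as "Kubernetes v1.21.1".
extract_version() {
  grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' <<<"$1" | head -n 1
}

# Hypothetical helper: verify kubelet, kubeadm and kubectl report the same version.
check_k8s_versions() {
  local want="${1:-v1.21.1}" out
  for out in "$(kubelet --version)" \
             "$(kubeadm version -o short)" \
             "$(kubectl version --client --short)"; do
    if [[ "$(extract_version "$out")" != "$want" ]]; then
      echo "mismatch: '$out' (expected $want)" >&2
      return 1
    fi
  done
  echo "kubelet, kubeadm and kubectl are all $want"
}
```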

Cluster deployment

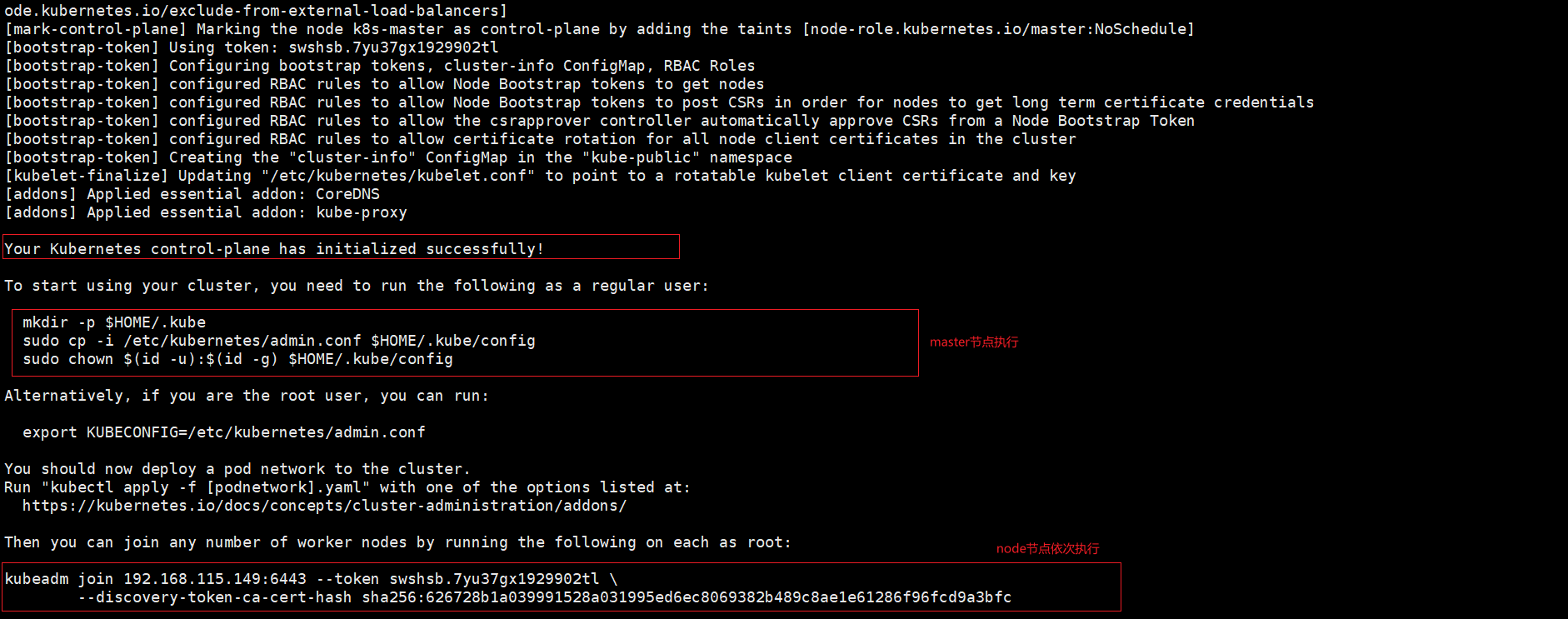

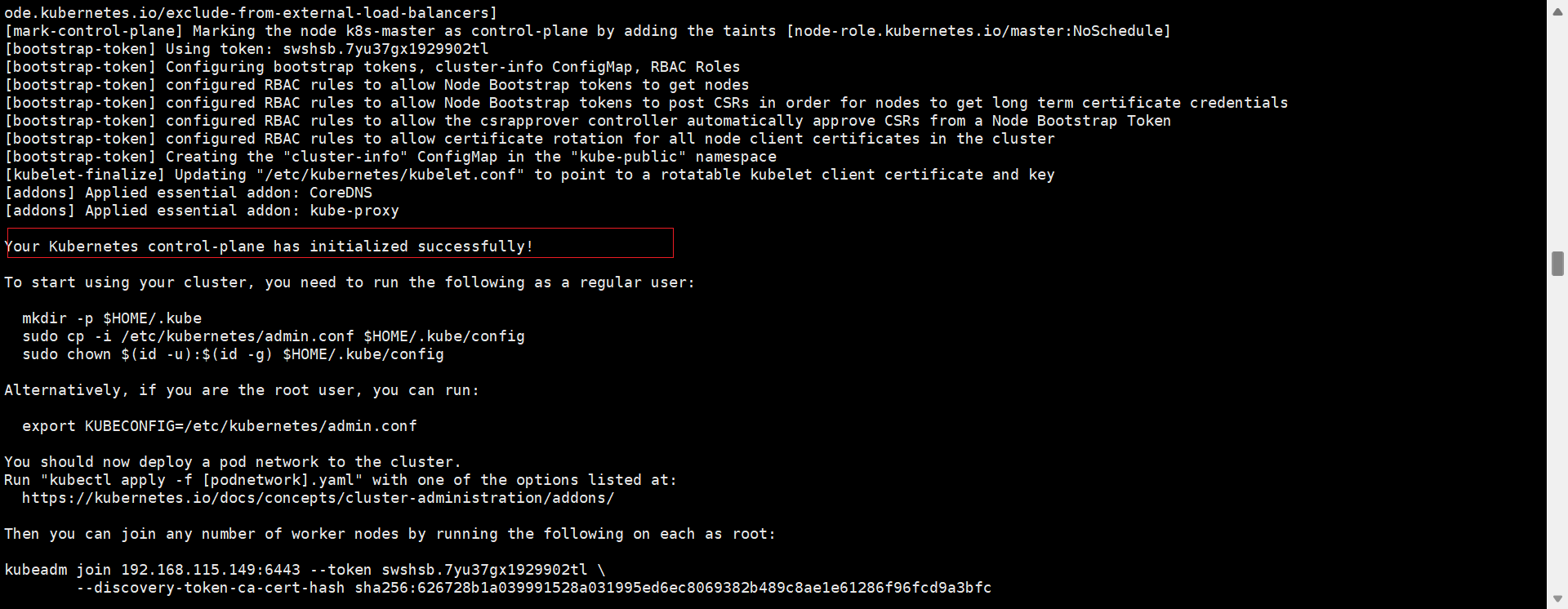

Note: this consists mainly of initializing the master node and joining the worker nodes.

    # On the master node: initialize the master
    kubeadm init --apiserver-advertise-address=192.168.115.149 \
        --image-repository registry.aliyuncs.com/google_containers \
        --kubernetes-version v1.21.1 \
        --service-cidr=10.140.0.0/16 \
        --pod-network-cidr=10.240.0.0/16

    # Give the current user kubectl access
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

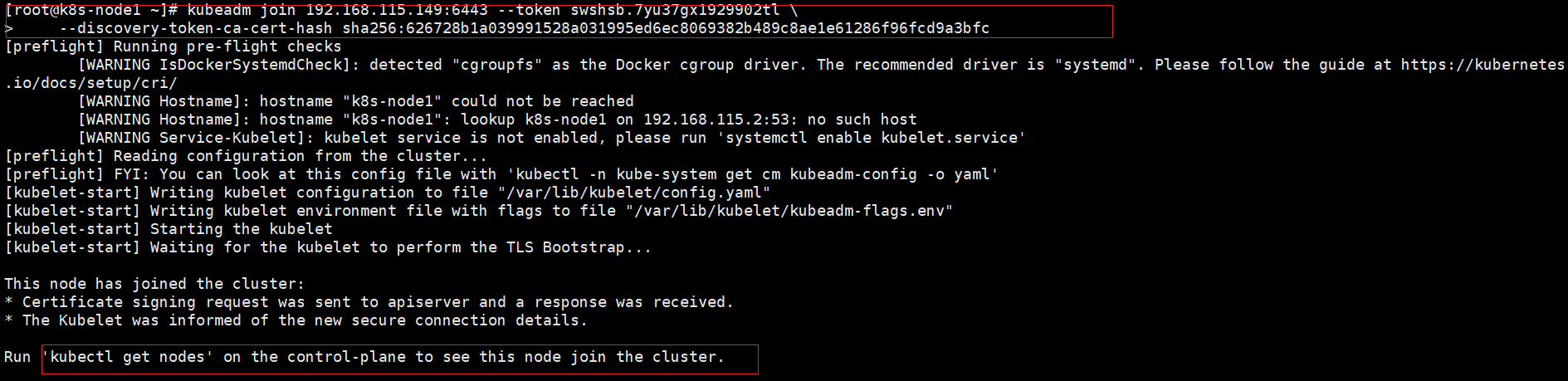

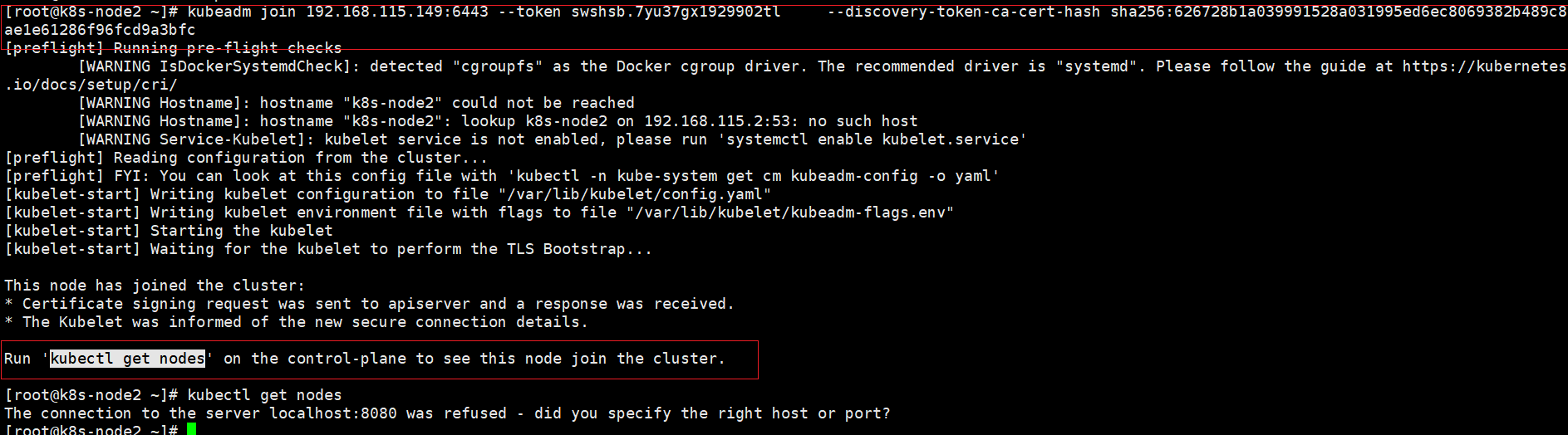
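The same init parameters can also be kept in a kubeadm configuration file, which is easier to review and reuse than a long command line. This is a sketch assuming kubeadm 1.21, whose config API version is kubeadm.k8s.io/v1beta2; the function name kubeadm_config and the file name kubeadm-config.yaml are hypothetical.

```bash
# Hypothetical helper: print a kubeadm config equivalent to the init flags above.
kubeadm_config() {
  cat <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.115.149                        # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.1                                 # --kubernetes-version
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  serviceSubnet: 10.140.0.0/16                             # --service-cidr
  podSubnet: 10.240.0.0/16                                 # --pod-network-cidr
EOF
}
```

Usage: `kubeadm_config > kubeadm-config.yaml && sudo kubeadm init --config kubeadm-config.yaml`.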

On each worker node: copy the join command printed by a successful kubeadm init and run it there.

    kubeadm join 192.168.115.149:6443 --token swshsb.7yu37gx1929902tl \
        --discovery-token-ca-cert-hash sha256:626728b1a039991528a031995ed6ec8069382b489c8ae1e61286f96fcd9a3bfc
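The token in this join command is only valid for a limited time (24 hours by default), so a node added later needs a fresh one. A sketch of the relevant commands: `kubeadm token create --print-join-command` and the openssl pipeline for the CA hash follow the kubeadm documentation, while valid_token, new_join_command and ca_cert_hash are hypothetical wrapper names. valid_token only checks the token format, which catches a value mangled by copy and paste.

```bash
# Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.
valid_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# On the master: print a complete join command with a newly created token.
new_join_command() {
  sudo kubeadm token create --print-join-command
}

# On the master: recompute the --discovery-token-ca-cert-hash value (without "sha256:").
ca_cert_hash() {
  openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //'
}
```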

#node节点加入后,可在master节点进行查看节点加入情况

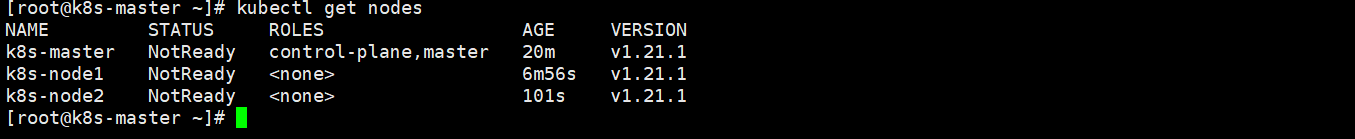

kubectl get nodes

Right after deployment the nodes are not Ready yet, so the last step in creating the k8s cluster is to install a network plugin.

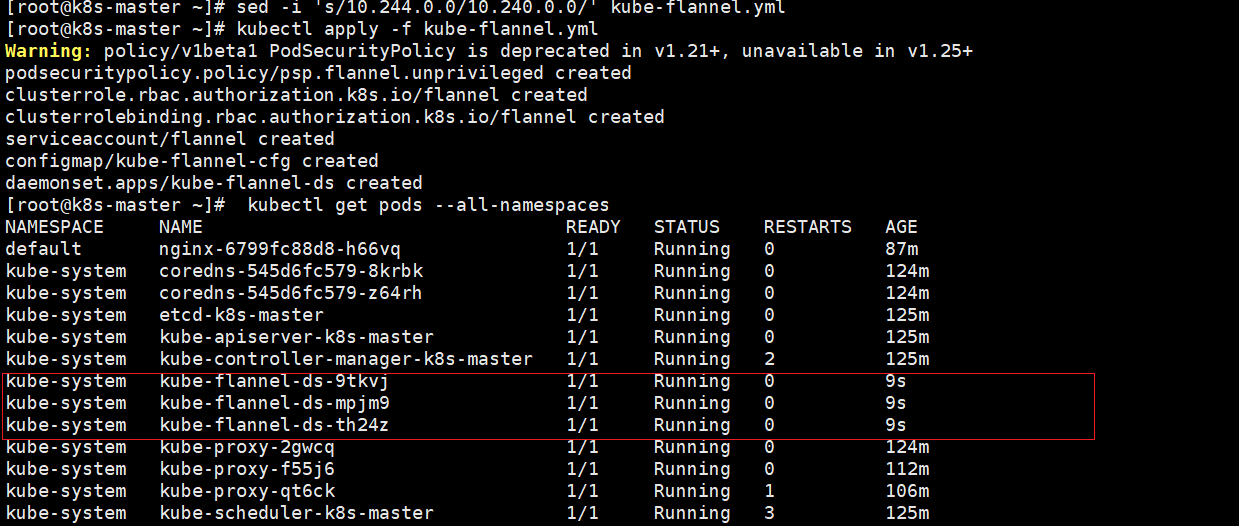
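Until the network plugin is running, `kubectl get nodes` shows the nodes as NotReady. A small sketch for checking this from a script; count_not_ready and wait_nodes_ready are hypothetical names, and the 300 s timeout is an arbitrary choice.

```bash
# Count nodes whose STATUS column is anything other than exactly "Ready",
# reading `kubectl get nodes --no-headers` output from stdin.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Block until all nodes report the Ready condition, or fail after 5 minutes.
wait_nodes_ready() {
  kubectl wait --for=condition=Ready nodes --all --timeout=300s
}
```

Usage: `kubectl get nodes --no-headers | count_not_ready` prints 0 once every node is Ready.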

Install the network plugin

Note: install it on the master node. Either the flannel or the calico plugin works; flannel is used here.

Create kube-flannel.yml (for example with vim kube-flannel.yml) with the following content:

    ---
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: psp.flannel.unprivileged
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
        seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
        apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
        apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
    spec:
      privileged: false
      volumes:
      - configMap
      - secret
      - emptyDir
      - hostPath
      allowedHostPaths:
      - pathPrefix: "/etc/cni/net.d"
      - pathPrefix: "/etc/kube-flannel"
      - pathPrefix: "/run/flannel"
      readOnlyRootFilesystem: false
      runAsUser:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      fsGroup:
        rule: RunAsAny
      allowPrivilegeEscalation: false
      defaultAllowPrivilegeEscalation: false
      allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
      defaultAddCapabilities: []
      requiredDropCapabilities: []
      hostPID: false
      hostIPC: false
      hostNetwork: true
      hostPorts:
      - min: 0
        max: 65535
      seLinux:
        rule: 'RunAsAny'
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    rules:
    - apiGroups: ['extensions']
      resources: ['podsecuritypolicies']
      verbs: ['use']
      resourceNames: ['psp.flannel.unprivileged']
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
    - apiGroups:
      - ""
      resources:
      - nodes
      verbs:
      - list
      - watch
    - apiGroups:
      - ""
      resources:
      - nodes/status
      verbs:
      - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-system
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan"
          }
        }
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      selector:
        matchLabels:
          app: flannel
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          affinity:
            nodeAffinity:
              requiredDuringSchedulingIgnoredDuringExecution:
                nodeSelectorTerms:
                - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                    - linux
          hostNetwork: true
          priorityClassName: system-node-critical
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni-plugin
            image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
            command:
            - cp
            args:
            - -f
            - /flannel
            - /opt/cni/bin/flannel
            volumeMounts:
            - name: cni-plugin
              mountPath: /opt/cni/bin
          - name: install-cni
            image: rancher/mirrored-flannelcni-flannel:v0.18.1
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: rancher/mirrored-flannelcni-flannel:v0.18.1
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: false
              capabilities:
                add: ["NET_ADMIN", "NET_RAW"]
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: EVENT_QUEUE_DEPTH
              value: "5000"
            volumeMounts:
            - name: run
              mountPath: /run/flannel
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
            - name: xtables-lock
              mountPath: /run/xtables.lock
          volumes:
          - name: run
            hostPath:
              path: /run/flannel
          - name: cni-plugin
            hostPath:
              path: /opt/cni/bin
          - name: cni
            hostPath:
              path: /etc/cni/net.d
          - name: flannel-cfg
            configMap:
              name: kube-flannel-cfg
          - name: xtables-lock
            hostPath:
              path: /run/xtables.lock
              type: FileOrCreate


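    # Change the network in net-conf.json to the --pod-network-cidr used when initializing the master
    sed -i 's/10.244.0.0/10.240.0.0/' kube-flannel.yml
    # Apply the manifest
    kubectl apply -f kube-flannel.yml
    # Check the installation status
    kubectl get pods --all-namespaces
    # Check whether the nodes are now Ready
    kubectl get nodes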
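The sed edit only helps if the resulting Network value really equals the --pod-network-cidr passed to kubeadm init (10.240.0.0/16 here); a mismatch can keep flannel from working correctly. A sketch for checking the manifest before applying it; flannel_network and check_flannel_cidr are hypothetical names, and the grep assumes the `"Network": "..."` layout of net-conf.json shown above.

```bash
# Print the "Network" value from the net-conf.json part of a flannel manifest.
flannel_network() {
  grep -oE '"Network": *"[^"]+"' "$1" | head -n 1 | sed -E 's/.*: *"([^"]+)"/\1/'
}

# Fail unless the manifest's Network equals the expected pod CIDR.
check_flannel_cidr() {
  local manifest="$1" want="${2:-10.240.0.0/16}" got
  got="$(flannel_network "$manifest")"
  if [[ "$got" != "$want" ]]; then
    echo "flannel Network is '$got', expected '$want'" >&2
    return 1
  fi
  echo "flannel Network matches $want"
}
```

Usage: `check_flannel_cidr kube-flannel.yml && kubectl apply -f kube-flannel.yml`. Afterwards, `kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}'` shows the per-node subnets allocated from the pod CIDR.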

Appendix: uninstalling flannel

Step 1: on the master node, go to the directory containing kube-flannel.yml and delete flannel:

    kubectl delete -f kube-flannel.yml

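Step 2: on each node, clean up the files left behind by the flannel network:

    ifconfig cni0 down
    ip link delete cni0
    ifconfig flannel.1 down
    ip link delete flannel.1
    rm -rf /var/lib/cni/
    rm -f /etc/cni/net.d/*

After running the commands above, restart kubelet (systemctl restart kubelet).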

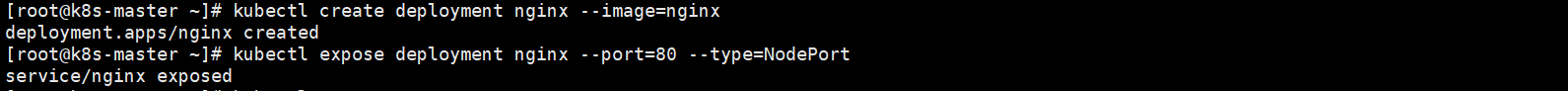

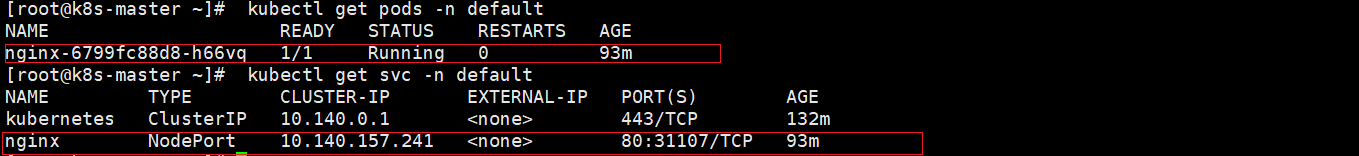

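Testing the Kubernetes cluster

Note: create an nginx deployment (one pod) and expose it outside the cluster; a random NodePort is assigned. Because no namespace is specified, everything is created in the default namespace.

    kubectl create deployment nginx --image=nginx
    kubectl expose deployment nginx --port=80 --type=NodePort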

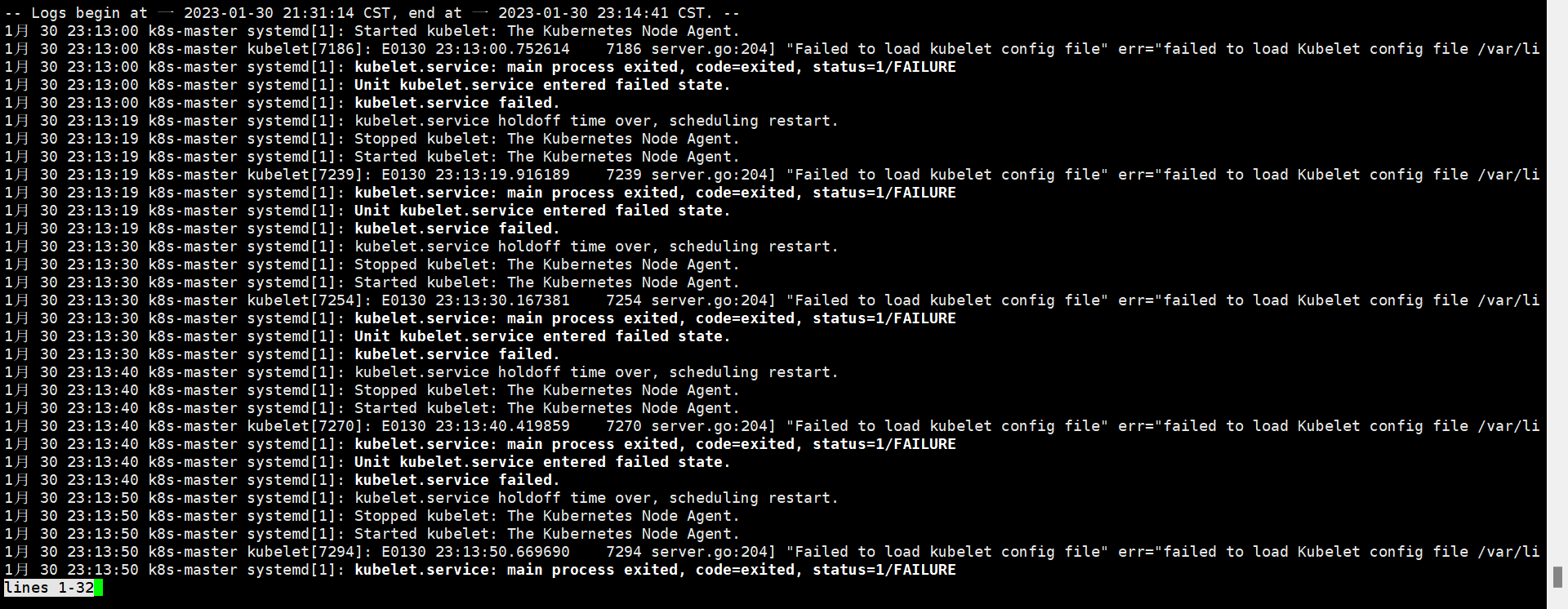
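To actually reach nginx you need the NodePort that was assigned. A sketch: jsonpath reads it directly, and nodeport_from_ports parses a PORT(S) value such as 80:31234/TCP if you are reading `kubectl get svc` output instead. Both function names are hypothetical, and using node1's IP is an arbitrary choice, since a NodePort is open on every node.

```bash
# Extract the NodePort from a PORT(S) value such as "80:31234/TCP".
nodeport_from_ports() {
  sed -E 's#^[0-9]+:([0-9]+)/TCP$#\1#' <<<"$1"
}

# Look up the nginx Service's NodePort and fetch the welcome page via node1.
test_nginx() {
  local port
  port="$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')"
  curl -s "http://192.168.115.149:${port}/" | grep -i 'welcome to nginx'
}
```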

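Troubleshooting

kubelet errors after starting it on the master node

Errors like the following in the kubelet status are normal at this point; just go ahead with the master initialization.

Handling master initialization problems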

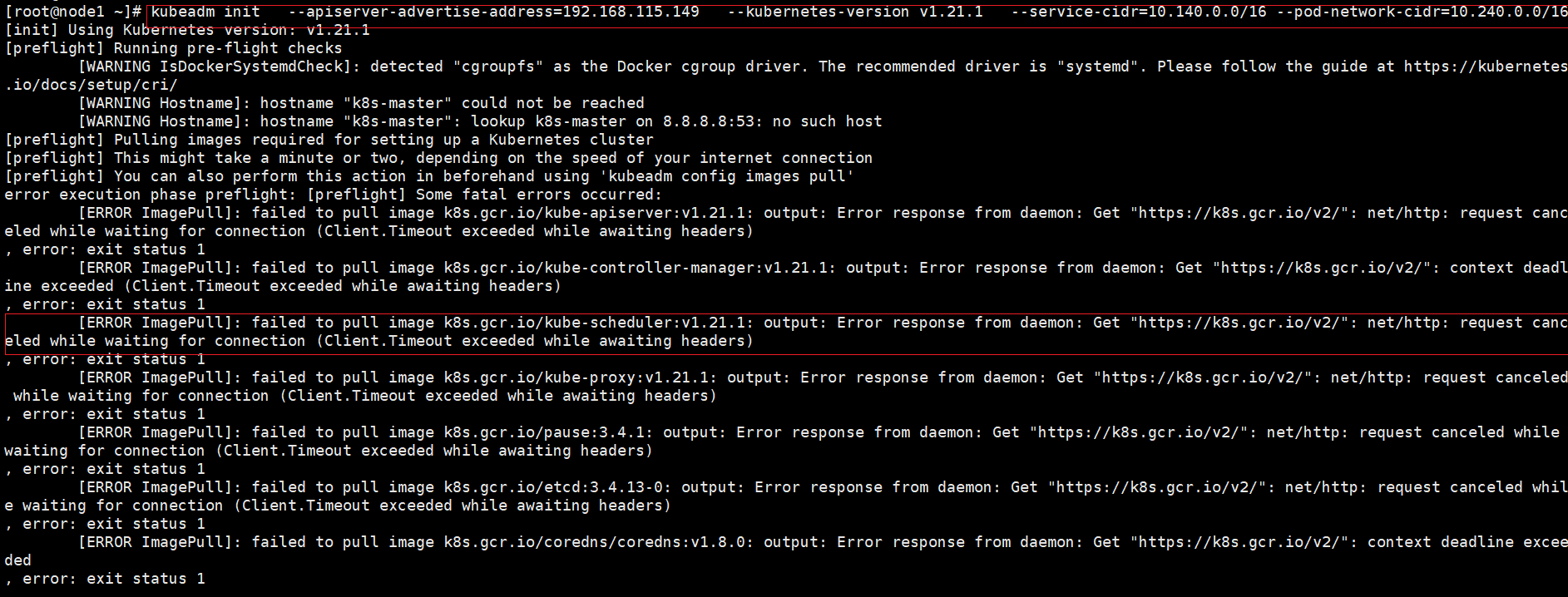

Running

    kubeadm init --apiserver-advertise-address=192.168.115.149 --kubernetes-version v1.21.1 --service-cidr=10.140.0.0/16 --pod-network-cidr=10.240.0.0/16

failed with the following error:

Cause: because of network restrictions in mainland China, kubeadm init hangs for a long time and then fails with this error. When no image repository is set, kubeadm init pulls its Docker images from k8s.gcr.io by default, and https://k8s.gcr.io/v2/ is unreachable from within China.

Solution: give kubeadm init an image repository:

    kubeadm init --apiserver-advertise-address=192.168.115.149 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.1 --service-cidr=10.140.0.0/16 --pod-network-cidr=10.240.0.0/16
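Two ways to see and fetch the images before running init, as a sketch: to_mirror (a hypothetical name) rewrites the default k8s.gcr.io names printed by `kubeadm config images list` to the Aliyun repository, showing exactly which names kubeadm will request; `kubeadm config images pull` with the same --image-repository and --kubernetes-version flags downloads them up front, so a pull failure shows up on its own instead of as a long hang inside init. prepull_images is likewise a hypothetical wrapper name.

```bash
# Rewrite default image names (k8s.gcr.io/...) to the Aliyun mirror repository.
to_mirror() {
  sed 's#^k8s\.gcr\.io/#registry.aliyuncs.com/google_containers/#'
}

# Pre-pull every image kubeadm init will need, from the mirror.
prepull_images() {
  sudo kubeadm config images pull \
    --image-repository registry.aliyuncs.com/google_containers \
    --kubernetes-version v1.21.1
}
```

Usage: `kubeadm config images list --kubernetes-version v1.21.1 | to_mirror`. Note that coredns keeps its nested coredns/coredns path after the rewrite.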

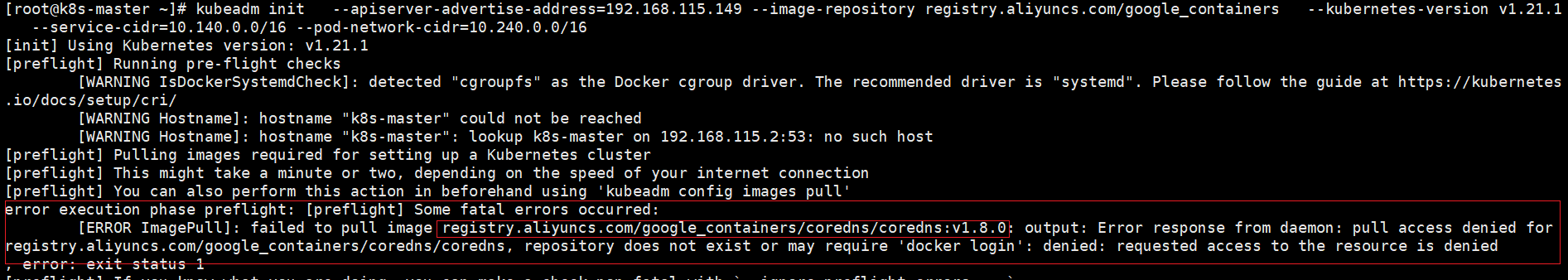

报错如下:

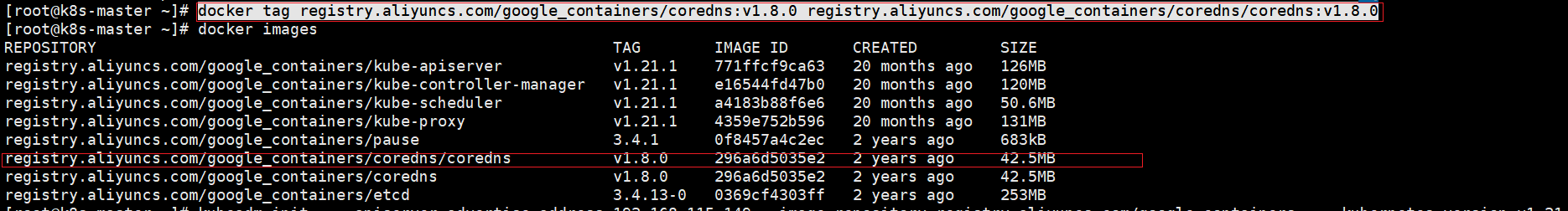

原因分析:拉取 registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0镜像失败

解决方案:可查询需要下载的镜像,手动拉取镜像修改tag

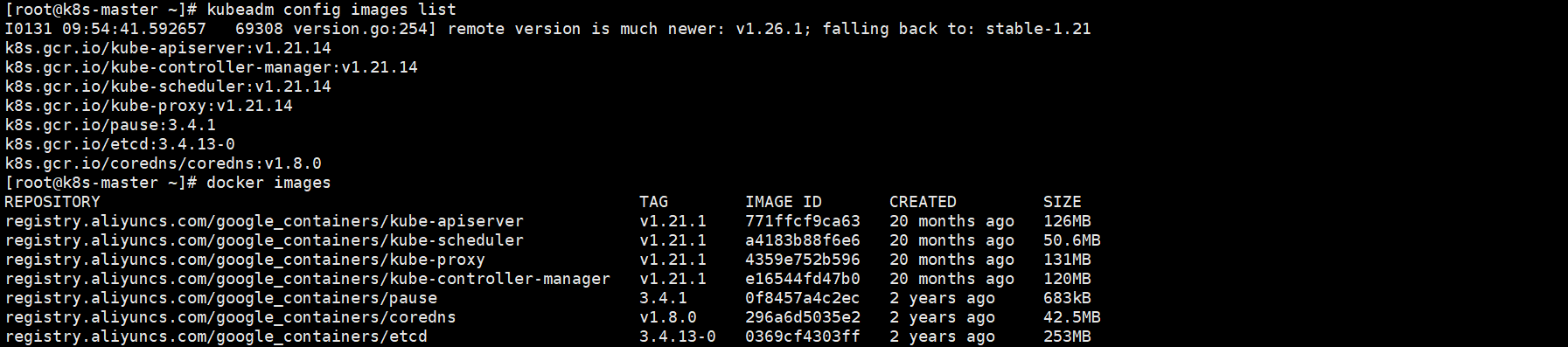

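    # List the images kubeadm needs
    kubeadm config images list
    # List the images already present locally
    docker images

A coredns:v1.8.0 image is already present, but under a different name, so retag it: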

    docker tag registry.aliyuncs.com/google_containers/coredns:v1.8.0 registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0
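The manual retag can be generalized into a loop, as a sketch. It assumes, as in the error above, that the mirror serves coredns only under the flat name .../coredns:<tag> while kubeadm 1.21 asks for .../coredns/coredns:<tag>; flat_name and pull_with_retag are hypothetical names.

```bash
# Map the nested coredns name kubeadm asks for to the flat name on the mirror.
flat_name() {
  sed 's#/coredns/coredns:#/coredns:#' <<<"$1"
}

# Pull each required image; if a nested coredns name cannot be pulled,
# pull the flat name instead and retag it to the name kubeadm expects.
pull_with_retag() {
  local img flat
  kubeadm config images list \
      --image-repository registry.aliyuncs.com/google_containers \
      --kubernetes-version v1.21.1 |
  while read -r img; do
    docker pull "$img" && continue
    flat="$(flat_name "$img")"
    [[ "$flat" != "$img" ]] && docker pull "$flat" && docker tag "$flat" "$img"
  done
}
```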

Run kubeadm init again:

    kubeadm init --apiserver-advertise-address=192.168.115.149 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.21.1 --service-cidr=10.140.0.0/16 --pod-network-cidr=10.240.0.0/16

It succeeded!

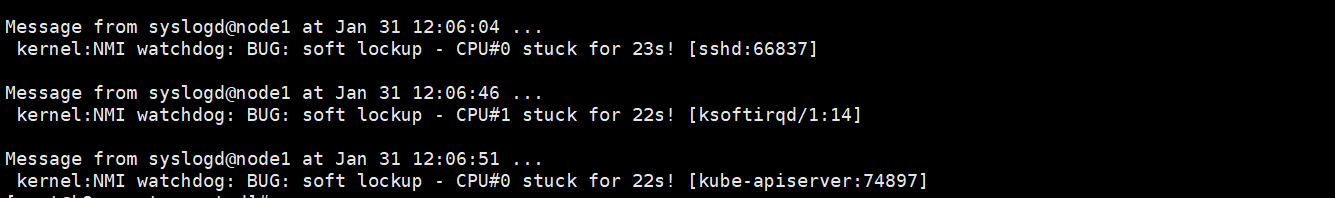

Record of a successful master initialization

kernel:NMI watchdog: BUG: soft lockup - CPU#1 stuck for 22s! [ksoftirqd/1:14]

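Cause: many high-load programs running at once led to a CPU soft lockup.

A soft lockup is a "soft deadlock" in the kernel: the bug does not bring the whole system down, but some processes (or kernel threads) are stuck in a particular state, usually in kernel space. In many cases this comes from how kernel locks are used.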
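The 22 s in the message lines up with the kernel's soft-lockup threshold, which is 2 × kernel.watchdog_thresh seconds (watchdog_thresh defaults to 10, giving 20 s). A sketch that prints the current threshold; softlockup_secs and show_softlockup_threshold are hypothetical names. Raising watchdog_thresh (for example `sysctl -w kernel.watchdog_thresh=30`) only makes the warning rarer; the underlying fix is reducing the load or giving the machine more CPU.

```bash
# A soft lockup is reported once a CPU is stuck longer than 2 * watchdog_thresh seconds.
softlockup_secs() {
  echo $(( 2 * $1 ))
}

# Show the current threshold on this machine (watchdog_thresh defaults to 10).
show_softlockup_threshold() {
  local t
  t="$(cat /proc/sys/kernel/watchdog_thresh)"
  echo "watchdog_thresh=${t}s -> soft lockup reported after $(softlockup_secs "$t")s"
}
```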

https://blog.csdn.net/qq_44710568/article/details/104843432

https://blog.csdn.net/JAVA_LuZiMaKei/article/details/120140987